Notes from the “Energy and Performance” session at OOPSLA

Last week I went to Portland to catch the part of the SPLASH[1] conference known as OOPSLA. I attended fourteen[2] OOPSLA talks, often paying attention!

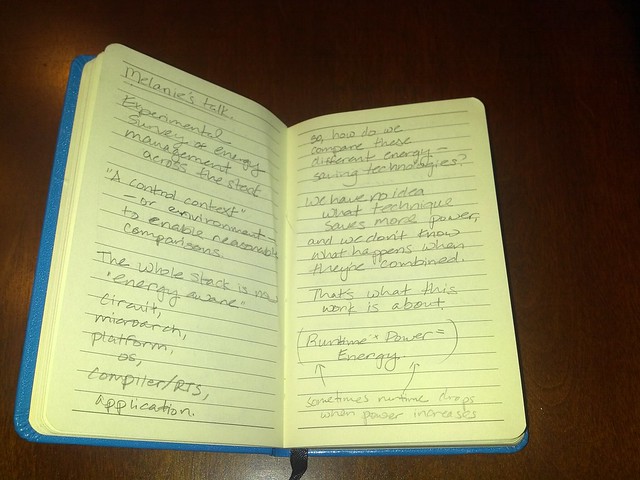

I arrived at OOPSLA knowing nothing about energy management, but went to the “Energy and Performance” session hoping to learn something, since it’s a favorite topic of some of my colleagues. It ended up being my favorite session of the conference. I especially liked Melanie Kambadur’s talk on her paper “An Experimental Survey of Energy Management Across the Stack”, co-authored by Martha Kim. It was from listening to Melanie’s talk that I came to understand why energy management is not to be confused with power management.

Power is the rate of doing work, or, equivalently, the rate of energy consumption. For instance, the joule is a unit of energy, and the fancy lightbulb in the lamp next to me is using energy at the rate of thirteen joules per second, also known as thirteen watts – a unit of power. If I leave it on for an hour, that’s 3600 seconds, so it’ll use 46,800 joules in that time – but it’ll still be using them at the rate of thirteen watts.

Since power = energy / time, it’s also the case that power * time = energy. (You can multiply a unit of power by a unit of time and get a unit of energy. Indeed, you can check my arithmetic above by asking Google how many watt-hours 46,800 joules is: it’s thirteen.) That means that we have two opportunities to improve the energy performance of, say, a computer that has to do a certain task: we can decrease the amount of power it needs to use to do the task, or we can decrease the time that the task takes to do. We have to be careful, though: if we decrease power, we might increase the amount of time that the task takes and therefore never get any actual energy savings. On the other hand, if we reduce the time that a computation takes (say, by parallelizing it to run on multiple cores), we might also increase power consumption (say, because more cores are active) and again kill our energy savings.
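To make the arithmetic concrete, here is a small Python sketch of the power * time = energy relationship. The lamp figures come from the paragraphs above; the three task configurations at the end are made-up numbers of my own, chosen only to illustrate how the trade-off can go either way, and are not measurements from any of the talks.

```python
JOULES_PER_WATT_HOUR = 3600.0  # 1 Wh = 1 W sustained for 3600 s


def energy_joules(power_watts, time_seconds):
    """Energy used when drawing a constant power for a given time."""
    return power_watts * time_seconds


def joules_to_watt_hours(joules):
    """Convert joules to watt-hours."""
    return joules / JOULES_PER_WATT_HOUR


# The lamp from the text: thirteen watts for one hour.
lamp_energy = energy_joules(13, 3600)
print(lamp_energy)                        # 46800
print(joules_to_watt_hours(lamp_energy))  # 13.0

# Hypothetical configurations of the same task (illustrative numbers only).
baseline = energy_joules(power_watts=20, time_seconds=10)   # 200 J
low_power = energy_joules(power_watts=12, time_seconds=18)  # 216 J
parallel = energy_joules(power_watts=35, time_seconds=5)    # 175 J

# Lower power but a longer runtime: more energy than the baseline.
print(low_power > baseline)  # True
# Higher power but a shorter runtime: less energy than the baseline.
print(parallel < baseline)   # True
```

With these invented numbers, the low-power configuration draws less power yet uses more energy, while the parallel one draws more power yet uses less. Whether a real technique lands on one side or the other is exactly what has to be measured.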

As Melanie explained in her talk, the whole computing stack is now “energy-aware”: energy-saving technologies turn up at every level of the stack, from the circuit level, to the microarchitecture, to the platform, to the OS, to the compiler and runtime system, to the application level. But how do we compare these different energy-saving technologies? We have no idea how to compare two approaches that operate at different levels of the stack. Nor do we know if combining two such approaches is a good idea, since it’s not obvious what happens when they’re combined; they could cancel each other out, or worse. The purpose of Melanie and her advisor’s work, then, was to create a standard, controlled environment in which different combinations of energy-saving approaches can be compared reasonably and broad conclusions can be drawn. They then tested thousands of combinations of energy-saving technologies in this controlled environment.

They found that adding parallel resources and turning on compiler optimizations tended to decrease program runtime enough to overtake the corresponding power increase, so those approaches were consistent energy savers. On the other hand, they found that frequency tuning – that is, the practice of lowering the frequency at which a processor runs during periods of low work – tended to only save power, not energy. That’s what I got from listening to the talk, but it only scratches the surface – there are lots more details in their paper.

The three other talks in the session were all interesting as well; I want to mention in particular Gustavo Pinto’s talk on “Understanding Energy Behaviors of Thread Management Constructs”. He and his coauthors looked specifically at the compiler and application level, and in fact specifically at the behavior of applications running on the JVM. They observed that as they increased the number of threads with which they ran a program, its energy consumption would first increase, then decrease, since as runtime got shorter, the time savings would eventually overtake the corresponding power increase. This was a good illustration of the trade-off between power and time consumption, and I thought it complemented Melanie’s results nicely.

[1] Fittingly, it rained the entire time.

[2] There were fifty-two accepted OOPSLA papers, but since the fifty-two talks were split over two tracks (making it so that I could have attended a maximum of twenty-six talks), and since a fair number of those took place after I had to leave for the airport, I think fourteen of fifty-two ain’t bad.
